Practices in Deep Learning

When you work with deep learning models, hunting for the optimal hyperparameters can get irritating and frustrating. Whether you start with a new architecture of your own, take a proven architecture, or start from transfer learning, hyperparameters are everywhere.

So, after spending a lot of time banging my head against model tuning, I discovered some must-try practices. Here's the list:

Learning Rate:

- When using a pretrained model that was trained on some other dataset (for example, ImageNet for image classification), don't reuse its learning rates for the new dataset.

- Use a learning rate finder:

  - Start with a tiny learning rate like 1e-10 and increase it exponentially with each training step.

  - Then train your network as usual.

  - Add the training loss to the summary and monitor it until it starts to grow rapidly.

  - TensorBoard is a must: visualize the training loss there.

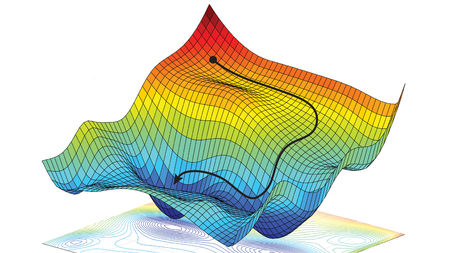

You need to find the section where the loss is decreasing fastest, and use the learning rate that was in effect at that training step.
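
Here is a minimal sketch of the learning rate finder steps above, in plain Python so it runs without any framework. Everything named here is my own illustration and not from any library: `exponential_lr_schedule`, `lr_range_test`, `suggest_lr`, the toy quadratic loss standing in for a real network, and the "4x the initial loss" stopping rule, which is an arbitrary assumption for "growing rapidly".

```python
import math

def exponential_lr_schedule(start_lr, end_lr, num_steps):
    """Learning rates that grow by a constant factor from start_lr to end_lr."""
    factor = (end_lr / start_lr) ** (1.0 / (num_steps - 1))
    return [start_lr * factor ** i for i in range(num_steps)]

def lr_range_test(loss_and_grad, w0, lrs):
    """Take one gradient step per learning rate; record the loss before each step."""
    w, losses = w0, []
    for lr in lrs:
        loss, grad = loss_and_grad(w)
        losses.append(loss)
        # Assumed stopping rule: the loss has blown up relative to where it started.
        if not math.isfinite(loss) or loss > 4 * losses[0]:
            break
        w -= lr * grad
    return losses

def suggest_lr(lrs, losses):
    """Return the learning rate used at the step where the loss dropped fastest."""
    # drops[i] is the loss decrease caused by the step taken with lrs[i].
    drops = [losses[i] - losses[i + 1] for i in range(len(losses) - 1)]
    best = max(range(len(drops)), key=drops.__getitem__)
    return lrs[best]

def toy_loss_and_grad(w):
    """Hypothetical stand-in for a network: loss = 0.5 * (w - 3)^2."""
    return 0.5 * (w - 3.0) ** 2, (w - 3.0)

lrs = exponential_lr_schedule(1e-10, 10.0, 100)
losses = lr_range_test(toy_loss_and_grad, 0.0, lrs)
print("suggested learning rate:", suggest_lr(lrs, losses))
```

With a real network the loss curve is noisy, so you would probably smooth it (or just eyeball it in TensorBoard) before picking the steepest section.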

- Don't use a single learning rate for all the training steps; use a schedule such as cosine_decay (cosine annealing).
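
To make the cosine schedule concrete, here is a small pure-Python sketch of the decay formula. It follows the formula as I understand it from the TensorFlow `cosine_decay` documentation, so treat the parameter names (`decay_steps`, `alpha`) and the exact behaviour as assumptions and check them against the version you use.

```python
import math

def cosine_decay(initial_lr, step, decay_steps, alpha=0.0):
    """Cosine-annealed learning rate.

    alpha is the floor, as a fraction of initial_lr, reached at decay_steps.
    """
    step = min(step, decay_steps)  # hold the final value after decay_steps
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return initial_lr * ((1.0 - alpha) * cosine + alpha)

# Print the schedule at a few points of a 1000-step run.
for step in (0, 250, 500, 750, 1000):
    print(step, cosine_decay(0.1, step, decay_steps=1000))
```

The rate starts at `initial_lr`, falls slowly at first, fastest around the middle of the run, and flattens out again near the end.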

Activation functions:

- I found that sigmoid_cross_entropy_with_logits works better than softmax for classification.

- Log softmax works better than softmax. It is just the logarithm of softmax, but in practice I found that it works better and converges faster.
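
One plausible reason for both observations (my guess, not something I measured) is numerical stability: both functions can be computed directly from the logits without ever forming a huge exponential. Below is a pure-Python sketch of the usual stable formulations. The function names mirror the TensorFlow ones only for readability; these are illustrative re-implementations, not the library code.

```python
import math

def log_softmax(logits):
    """log(softmax(x)) computed with the log-sum-exp trick."""
    m = max(logits)  # subtracting the max keeps every exp() argument <= 0
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

def sigmoid_cross_entropy_with_logits(labels, logits):
    """Per-element sigmoid cross-entropy: max(x, 0) - x * z + log(1 + exp(-|x|))."""
    return [
        max(x, 0.0) - x * z + math.log1p(math.exp(-abs(x)))
        for z, x in zip(labels, logits)
    ]

# A naive log(exp(x) / sum(exp(x))) fails here: math.exp(1000.0) raises OverflowError.
print(log_softmax([1000.0, 0.0]))
print(sigmoid_cross_entropy_with_logits([1.0, 0.0], [1000.0, -1000.0]))
```

Note that the sigmoid loss treats every class as an independent yes/no decision, while softmax makes the classes compete, so the two are not interchangeable for every problem.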